ESR7 – Syed Muhammad Umair Arif

Evaluating the Quality of Experience of immersive visual systems in operative control rooms.

The focus of this work is to define the influencing factors and measure the Quality of Experience for immersive head-mounted display technologies (augmented reality, mixed reality). Defining the influencing factors reveals how users experience these systems and which factors degrade the overall experience.

About the Project:

Augmented and mixed reality head-mounted displays have the potential to replace semi-immersive displays, such as widescreen LED displays and projectors, in control room environments to visualize information such as 3D models, maps, text, and videos. Mixed reality head-mounted displays have been widely adopted as an assisting tool for operators to interact with the blended world without visual interruption from the real world.

Illustrations by S.M.Umair Arif. All rights reserved.

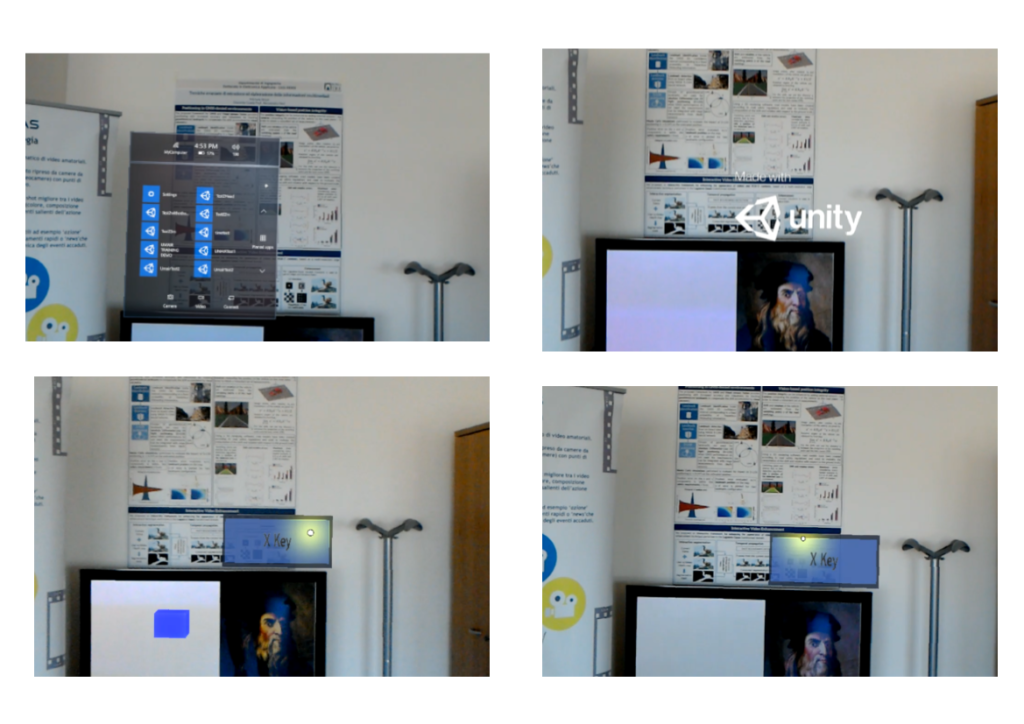

Recent research has shown that immersive visual systems improve user performance during operations while also supporting high-level human cognitive functions such as alertness, attention, working memory, and visual perception. ITU-T has made significant efforts to develop and enforce evaluation standards for immersive systems in multimedia applications where content is viewed passively with minimal or no interaction. Against this background, we first performed a comparative study of traditional rendering interfaces and mixed reality, which may be used for various safety-critical applications. To this end, we designed a mixed reality application and tested it on both traditional non-immersive and mixed reality interfaces. Secondly, we evaluated spatial rendering distances to analyze the visual experience in mixed reality, gathering user feedback on various projection distances within a mixed reality environment.

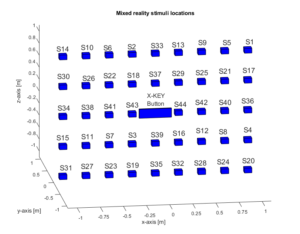

Lastly, we performed a human factors study to measure the reaction time of subjects in the mixed reality environment. We first estimated the detection accuracy through omitted, anticipated, and completed responses; then we related stimulus location, scene content, and estimated accuracy. Experimental results show that, in addition to the saliency of the real scene, natural body gesture technology, system factors of the device, and the limited field of view influenced the human reaction time needed to perceive the holographic stimuli and provide the appropriate response.
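As an illustration of the response categories mentioned above, the following is a minimal sketch of how simple reaction time trials could be labelled as omitted, anticipated, or completed. The threshold values, the function names, and the definition of accuracy as the share of completed responses are assumptions made for this example only; they are not taken from the published studies.

```python
from typing import List, Optional

# Assumed thresholds, chosen only for illustration.
ANTICIPATION_MS = 100.0   # faster than this is treated as an anticipated response
TIMEOUT_MS = 2000.0       # slower than this (or no response) is treated as omitted


def classify_response(rt_ms: Optional[float]) -> str:
    """Label one trial from its reaction time in milliseconds (None = no response)."""
    if rt_ms is None or rt_ms > TIMEOUT_MS:
        return "omitted"
    if rt_ms < ANTICIPATION_MS:
        return "anticipated"
    return "completed"


def detection_accuracy(rts_ms: List[Optional[float]]) -> float:
    """Share of trials labelled 'completed' (an assumed definition of accuracy)."""
    if not rts_ms:
        return 0.0
    labels = [classify_response(rt) for rt in rts_ms]
    return labels.count("completed") / len(labels)


if __name__ == "__main__":
    # Hypothetical trial values, not measured data.
    trials = [None, 50.0, 350.0, 420.0]
    print([classify_response(rt) for rt in trials])
    print(detection_accuracy(trials))
```

Grouping the resulting labels by stimulus location or scene content would then allow accuracy to be compared across conditions.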

Project Milestones:

Milestone 1: “Definition of system requirements in operative control rooms”

To complete the first milestone, we gathered data through various questionnaires at the second ImmerSAFE Tech Day from experts of the Italian firefighters’ “Istituto Superiore Antincendi” who work in control rooms. In the second step, we developed a cross-platform application that renders on both a mixed reality HMD and traditional 2D LED displays, collected user preferences, and published the results at the ISPA 2019 conference.

Milestone 2: “Human Factors in Decision Making in the Control Room Scenarios”

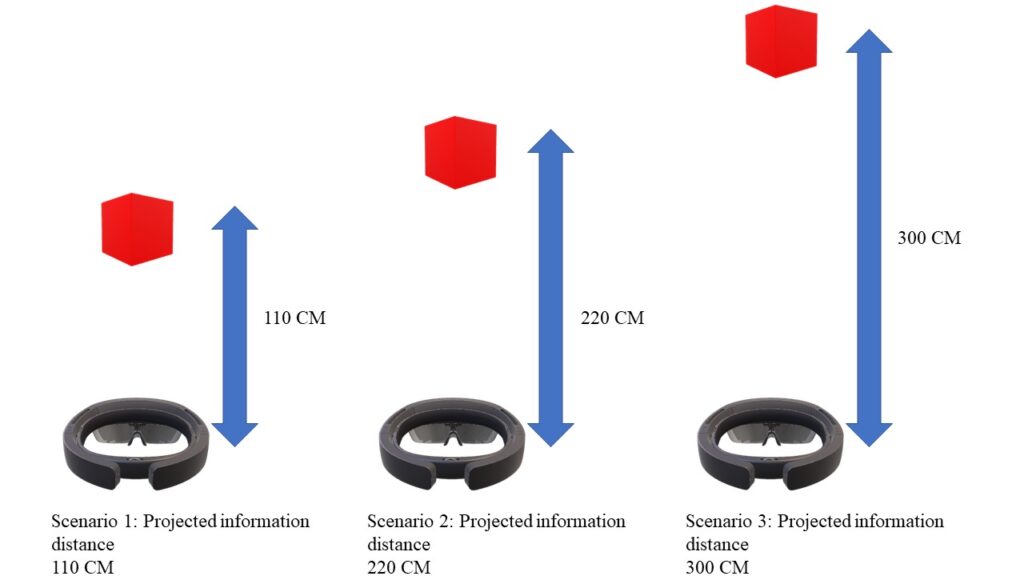

To complete the second milestone, we divided it into further substeps. In the first step, we evaluated human reaction time at various spatial distances, i.e., 110 cm, 220 cm, and 300 cm. Experimental results show that 220 cm is the most suitable of these distances for users to visualize and interact with the holographic content.

In the second phase, we evaluated human reaction time in two controlled environments, one with minimal and one with maximal visual distraction, by varying the number of stimuli. For this purpose, we set up laboratory-controlled environments and developed mixed reality applications to render on mixed reality headsets.

Mixed Reality Simple Reaction Time Application

The spatial location of holographic stimuli

Publications:

- S. M. U. Arif, P. Mazumdar, and F. Battisti: A Comparative Study of Rendering Devices for Safety-Critical Applications: 2019 11th International Symposium on Image and Signal Processing and Analysis (ISPA): 09/2019

- S. M. U. Arif, M. Carli, and F. Battisti: A study on human reaction time in a mixed reality environment: 2021 International Symposium on Signals, Circuits and Systems (ISSCS): 07/2021

- S. M. U. Arif, M. Brizzi, M. Carli, and F. Battisti: Human reaction time in a mixed reality environment: Frontiers in Neuroscience: 08/2022

Secondments:

Secondments were pending due to COVID-19 travel and institutional restrictions.

About the ESR:

Umair received his Diploma of Associate Engineering in Electrical Engineering with Distinction (Science Institute of Technology, Pakistan). He then completed a Bachelor of Science in Computer Science (BSCS), majoring in computer network systems (Federal Urdu University of Arts, Science & Technology, FUUAST, Islamabad, Pakistan), and a master's degree in Bio Technical Systems and Technology with Distinction (Tomsk Polytechnic University, Tomsk, Russia), and received a Gold Medal.

Umair started his doctoral studies at Roma Tre University, Department of Industrial, Electronic and Mechanical Engineering, COMLAB, in December 2018. He successfully defended his dissertation in October 2022 and received the title of Doctor of Philosophy.

Contact Email:

umairarif88@gmail.com, syedmuhammadumair.arif@uniroma3.it

This project has received funding from the European Union’s Horizon 2020 research and innovation programme under the Marie Sklodowska-Curie grant agreement No 764951.
